Google has some amazing features you might not notice unless you look closely, and I will discuss one such feature in this article.

What is Google Lens?

Google Lens is an image recognition mobile app developed by Google, first announced at Google I/O in May 2017. It is designed to bring up relevant information using visual analysis. What this means is simple: instead of typing a word into the search bar, you use the device's camera to do the search.

When you point the phone's camera at an object, Google Lens attempts to identify the object or read any labels and text, then shows relevant search results and information. For example, when you point the camera at a Wi-Fi label containing the network name and password, it will automatically connect to the Wi-Fi network that has been scanned. Lens is also integrated with the Google Photos and Google Assistant apps. The service is similar to Google Goggles, an earlier app that worked in much the same way but was less capable. Lens uses more advanced deep learning routines, similar to other apps like Bixby Vision (for Samsung devices released 2016 and after) and Image Analysis Toolset (available on Google Play). Artificial neural networks are used to detect and identify objects and landmarks, and to improve optical character recognition (OCR) accuracy.
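To make the Wi-Fi example a little more concrete, here is a minimal Python sketch of what could happen after the text on a label has been read by OCR. This is not how Google Lens is actually implemented. The function name `parse_wifi_label`, the label keywords, and the sample label are all assumptions made up for illustration, and real labels vary widely.

```python
import re
from typing import Optional, Tuple

# Hypothetical keywords that might precede each value on a printed label.
SSID_PATTERN = re.compile(
    r"(?:network(?:\s+name)?|ssid|wi-?fi(?:\s+name)?)\s*[:=]\s*(.+)",
    re.IGNORECASE,
)
PASSWORD_PATTERN = re.compile(
    r"(?:password|passphrase|key|pwd)\s*[:=]\s*(.+)",
    re.IGNORECASE,
)


def parse_wifi_label(text: str) -> Optional[Tuple[str, str]]:
    """Return (network_name, password) found in OCR text, or None."""
    ssid = None
    password = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Check for a network-name line first, then a password line.
        ssid_match = SSID_PATTERN.match(line)
        if ssid_match and ssid is None:
            ssid = ssid_match.group(1).strip()
            continue
        password_match = PASSWORD_PATTERN.match(line)
        if password_match and password is None:
            password = password_match.group(1).strip()
    # Only report a result when both pieces were recognized.
    if ssid and password:
        return ssid, password
    return None


if __name__ == "__main__":
    # Made-up label text, standing in for what OCR might return.
    label = "Network name: CoffeeShop_Guest\nPassword: latte-1234"
    print(parse_wifi_label(label))
```

Once the network name and password have been pulled out like this, an app could hand them to the phone's Wi-Fi settings. That step depends on the operating system and is left out of the sketch.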

Availability

Google officially launched Google Lens on October 4, 2017, with app previews pre-installed on the Google Pixel 2. In November 2017, the feature began rolling out to the Google Assistant on Pixel and Pixel 2 phones. A preview of Lens was also added to the Google Photos app for Pixel phones. On March 5, 2018, Google officially released Google Lens in Google Photos on non-Pixel phones. Support for Lens in the iOS version of Google Photos was added on March 15, 2018. Beginning in May 2018, Google Lens was made available within Google Assistant on OnePlus and Infinix Note 5 devices, and was also integrated into the camera apps of various Android phones. A standalone Google Lens app was made available on Google Play in June 2018. Device support is limited, although it is not clear which devices are not supported or why. It requires Android Marshmallow (6.0) or newer.

How Does It Work?

It is not rocket science. All you have to do is follow a few simple steps!

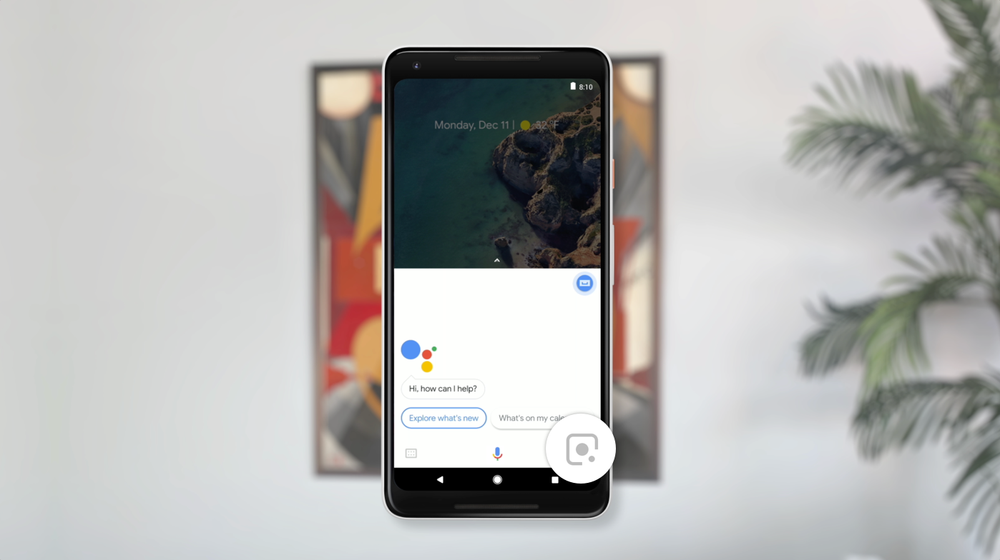

STEP 1: Launch the Google Assistant app by pressing and holding the Home button for about three seconds. The Assistant pops up with the question “Hi, how can I help?”

STEP 2: Ignore the question and tap the Lens icon at the bottom right of the screen.

STEP 3: The camera launches and the message “Tap on objects and text” is displayed at the bottom of the screen. This simply means you point the camera at the object or text you want to find out about and tap on it.

STEP 4: Once you tap, the message “Looking for results” appears, and shortly afterwards the result is displayed, showing the name of the object or the text it captured.